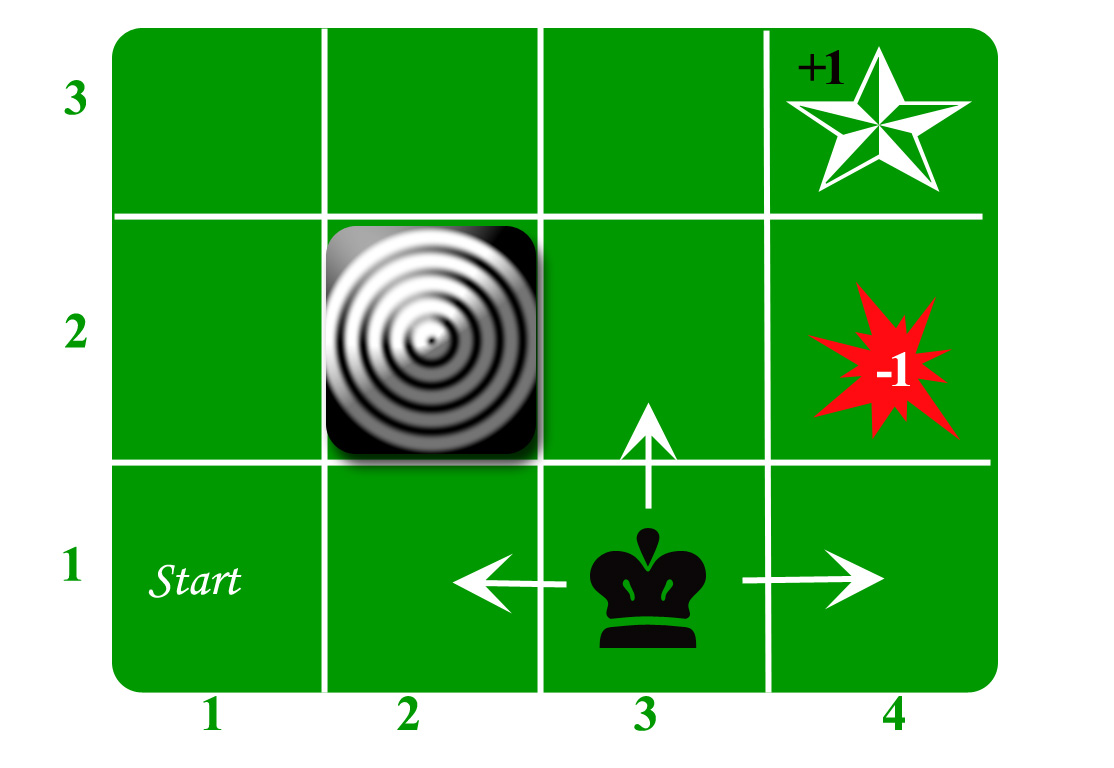

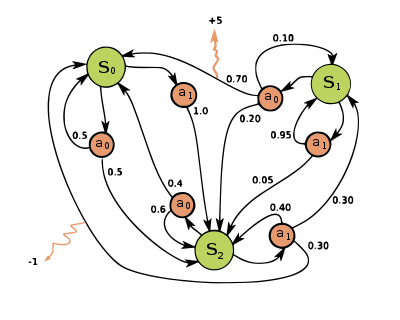

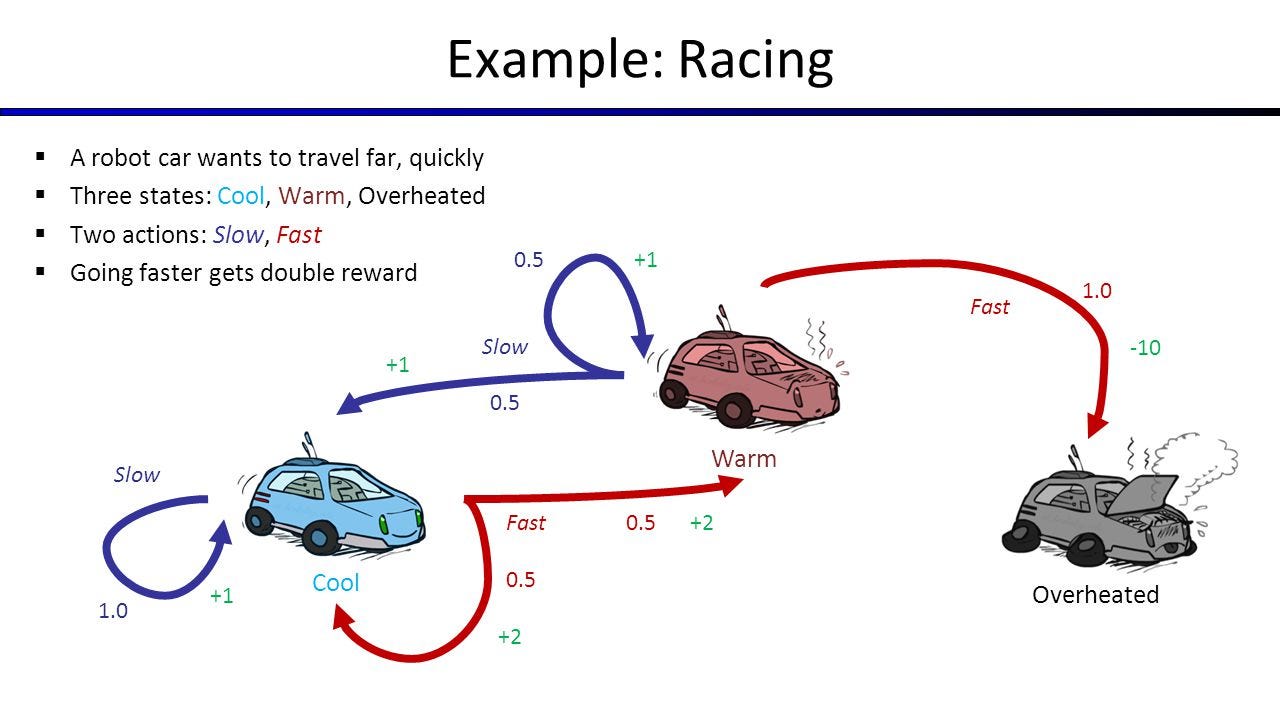

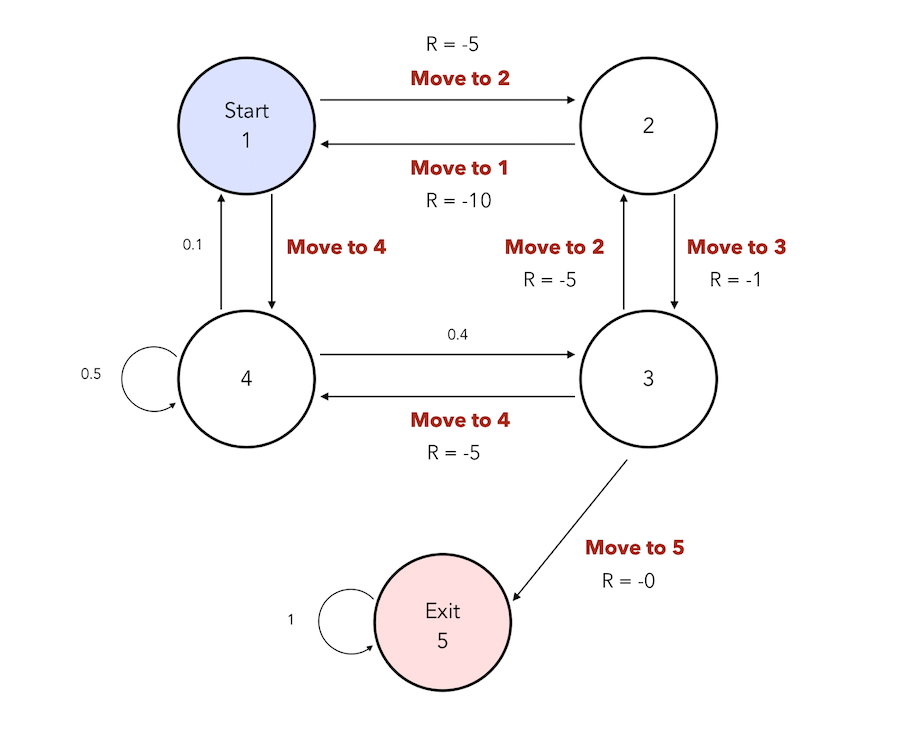

Markov Decision Process Example. A Markov decision process adds an input (an action, or control) to a Markov chain with costs: the input selects from a set of possible transition probabilities and, in the standard information pattern, the input is a function of the state. Definition 1 (discrete-time Markov decision process): let AP be a finite set of atomic propositions. An MDP also has a set of possible actions A. In the grid world example, the agent gets rewards in certain cells, and the goal of the agent is to maximize reward.

Markov Decision Process (MDP) Toolbox for MATLAB, from cs.ubc.ca

Grid world example with rewards +1 and -1. A two-state POMDP becomes a four-state Markov chain. These are a bit more elaborate than a simple example model, but are probably of interest since they are applied examples. An MDP model contains a set of possible world states S, a set of possible actions A, a real-valued reward function R(s, a), and a description T of each action's effects in each state. It also contains a set of models.
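The components listed above (states S, actions A, reward function R(s, a), and transition description T) can be written down as a small data structure. The following is a minimal sketch, not a standard API: the class name `MDP`, the `validate` helper, and the two-state machine example are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

State = str
Action = str


@dataclass(frozen=True)
class MDP:
    """Container for the four MDP components (illustrative names)."""
    states: List[State]                                          # S
    actions: List[Action]                                        # A
    reward: Dict[Tuple[State, Action], float]                    # R(s, a)
    transition: Dict[Tuple[State, Action], Dict[State, float]]   # T(s, a) -> P(s')

    def validate(self) -> None:
        """Check that every (s, a) pair has a reward and a proper distribution."""
        for s in self.states:
            for a in self.actions:
                assert (s, a) in self.reward
                dist = self.transition[(s, a)]
                assert all(nxt in self.states for nxt in dist)
                assert abs(sum(dist.values()) - 1.0) < 1e-9


# Hypothetical toy problem: a machine that is either working or broken.
toy = MDP(
    states=["ok", "broken"],
    actions=["run", "repair"],
    reward={
        ("ok", "run"): 1.0,
        ("ok", "repair"): -0.5,
        ("broken", "run"): 0.0,
        ("broken", "repair"): -1.0,
    },
    transition={
        ("ok", "run"): {"ok": 0.9, "broken": 0.1},
        ("ok", "repair"): {"ok": 1.0},
        ("broken", "run"): {"broken": 1.0},
        ("broken", "repair"): {"ok": 1.0},
    },
)
toy.validate()
```

Storing T as a dictionary of next-state distributions keeps the sketch readable; array-based layouts are more common once the state space grows.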

Available functions: forest (a simple forest management example), rand (a random example), and small (a very small example). `mdptoolbox.example.forest(S=3, r1=4, r2=2, p=0.1, is_sparse=False)` generates an MDP example based on a simple forest management scenario.
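To make the forest scenario concrete, here is a plain-Python sketch of how such an example could be built. It is not the toolbox's implementation. It assumes that states are forest age classes, that action 0 means "wait" and action 1 means "cut", that a fire with probability p resets the forest to the youngest state, and that r1 and r2 are the rewards for waiting and cutting in the oldest state. The function name `forest_sketch` and the list-based layout are hypothetical.

```python
def forest_sketch(S=3, r1=4.0, r2=2.0, p=0.1):
    """Return (P, R) with P[a][s][s2] and R[s][a]; action 0 = wait, 1 = cut.

    Assumed dynamics: waiting lets the forest age by one class unless a
    fire (probability p) resets it to state 0; cutting always resets it.
    """
    wait = [[0.0] * S for _ in range(S)]
    cut = [[0.0] * S for _ in range(S)]
    for s in range(S):
        wait[s][0] += p                        # fire burns the forest down
        wait[s][min(s + 1, S - 1)] += 1 - p    # otherwise the forest grows
        cut[s][0] = 1.0                        # cutting restarts growth

    # Assumed rewards: cutting pays 1 in intermediate states, nothing in the
    # youngest state; the oldest state pays r1 for waiting and r2 for cutting.
    R = [[0.0, 1.0] for _ in range(S)]
    R[0][1] = 0.0
    R[S - 1][0] = r1
    R[S - 1][1] = r2
    return [wait, cut], R


P, R = forest_sketch()
for row in P[0]:
    print(row)
```

Each row of `P[0]` and `P[1]` sums to one, which is the property any transition description must satisfy regardless of the layout chosen.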

Introduction to MDPs: Markov decision processes formally describe an environment for reinforcement learning in which the environment is fully observable. Finally, for the sake of completeness, we collect facts. A search process can then be used to find the finite controller that maximizes the utility of the POMDP. The examples in unit 2 were not influenced by any active choices; everything was random. This is why they could be analyzed without using MDPs.

Source: youtube.com

An MDP allows the formalization of sequential decision making, where an action taken in a state influences not just the immediate reward but also the subsequent state.

Source: geeksforgeeks.org

Reinforcement learning. It is assumed that all state spaces S_n are finite or countable and that all reward functions r_n and g_N are bounded from above. Since the demand for a product is random, a warehouse will ... We assume the Markov property.
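One common way to state the Markov property assumed above is shown below. The notation is an assumption made here rather than taken from the source: S_t denotes the state and A_t the action at time t, and s' is a candidate next state.

$$
\Pr\left(S_{t+1} = s' \mid S_t = s_t, A_t = a_t, S_{t-1} = s_{t-1}, A_{t-1} = a_{t-1}, \dots, S_0 = s_0, A_0 = a_0\right)
= \Pr\left(S_{t+1} = s' \mid S_t = s_t, A_t = a_t\right)
$$

In words, the next state depends on the history only through the current state and the action taken in it.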

Source: towardsdatascience.com

Recall that the stochastic processes in unit 2 were processes that involve randomness. The Markov decision process (MDP) is a foundational element of reinforcement learning (RL).

Source: sciencedirect.com

Outline: framework, Markov chains, MDPs, value iteration, extensions. Now we're going to think about how to do planning in uncertain domains. The environment, in return, provides rewards and a new state based on the actions of the agent. Those applied examples are Markov models of the COVID-19 pandemic, projecting hospitalizations, ICU needs, case counts, and deaths under different mitigation strategies.

Source: researchgate.net

Discrete-time Markov decision processes: let S be a finite or countable set. The agent gets to observe the state.

Source: wikiwand.com

Markov decision process (MDP) example. An MDP model includes a set of possible world states S. The MDP framework is an extension of decision theory, but focused on making long-term plans of action.

Source: neptune.ai

It is a very useful framework for modeling problems that maximize longer-term return by taking a sequence of actions. By mapping a finite controller into a Markov chain, one can compute the utility of that finite controller for a POMDP.

Source: medium.com

Actions: left, right, up, down. The agent takes one action per time step, and actions are stochastic.

Source: temi-babs.medium.com

British Gas currently has three schemes for quarterly payment of gas bills. The Amazing Goods Company supply chain: consider an example of a supply chain problem that can be formulated as a Markov decision process.

Source: cs.ubc.ca

Markov theory is only a simplified model of a complex decision-making process. Drawing from Sutton and Barto, Reinforcement Learning. In the grid world, the agent only goes in the intended direction 80% of the time.
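The stochastic grid-world moves described above can be sketched as a transition function. Several details here are assumptions not stated in the source: the remaining 20% is split evenly between the two perpendicular directions, the grid is 4 by 3, and a move that would leave the grid keeps the agent in place. All names (`MOVES`, `SIDEWAYS`, `next_cell`, `transition`) are hypothetical.

```python
from typing import Dict, Tuple

Cell = Tuple[int, int]

MOVES = {"up": (0, 1), "down": (0, -1), "left": (-1, 0), "right": (1, 0)}
SIDEWAYS = {
    "up": ("left", "right"),
    "down": ("left", "right"),
    "left": ("up", "down"),
    "right": ("up", "down"),
}


def next_cell(cell: Cell, direction: str, width: int, height: int) -> Cell:
    """Move one step; stay in place if the move would leave the grid."""
    dx, dy = MOVES[direction]
    x, y = cell[0] + dx, cell[1] + dy
    return (x, y) if 0 <= x < width and 0 <= y < height else cell


def transition(cell: Cell, action: str, width: int = 4, height: int = 3,
               p_intended: float = 0.8) -> Dict[Cell, float]:
    """Return the distribution over next cells for one stochastic action."""
    slip = (1.0 - p_intended) / 2   # assumed: split evenly between side moves
    outcomes = [(action, p_intended)] + [(d, slip) for d in SIDEWAYS[action]]
    dist: Dict[Cell, float] = {}
    for direction, prob in outcomes:
        nxt = next_cell(cell, direction, width, height)
        dist[nxt] = dist.get(nxt, 0.0) + prob   # merge outcomes that coincide
    return dist


print(transition((0, 0), "up"))
```

From the corner cell (0, 0), choosing "up" leads to (0, 1) with probability 0.8, slips right to (1, 0) with about 0.1, and the slip to the left hits the wall, so the agent stays put with about 0.1.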

Source: researchgate.net

Solution methods: value iteration, policy iteration, and linear programming (Pieter Abbeel, UC Berkeley EECS). Markov decision processes: the Markov decision problem and examples. We denote the set of all distributions on S by Distr(S).
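Of the solution methods named above, value iteration is the simplest to sketch. The version below is a minimal illustration under stated assumptions: a discounted infinite-horizon objective with discount factor `gamma`, transitions stored as `P[a][s][s2]`, and rewards as `R[s][a]`. The function name, the stopping rule, and the two-state example are hypothetical.

```python
def value_iteration(P, R, gamma=0.9, tol=1e-8, max_iter=10_000):
    """Repeat Bellman optimality backups until the values stop changing.

    P[a][s][s2] is the probability of moving from s to s2 under action a,
    and R[s][a] is the immediate reward. Returns (values, greedy policy).
    """
    n_actions, n_states = len(P), len(P[0])
    V = [0.0] * n_states

    def q(s, a):
        # Expected return of taking a in s, then following current values.
        return R[s][a] + gamma * sum(P[a][s][s2] * V[s2] for s2 in range(n_states))

    for _ in range(max_iter):
        new_V = [max(q(s, a) for a in range(n_actions)) for s in range(n_states)]
        delta = max(abs(x - y) for x, y in zip(new_V, V))
        V = new_V
        if delta < tol:
            break

    policy = [max(range(n_actions), key=lambda a: q(s, a)) for s in range(n_states)]
    return V, policy


# Hypothetical example: action 0 stays put, action 1 switches state,
# and only staying in state 1 pays a reward of 1.
P = [
    [[1.0, 0.0], [0.0, 1.0]],   # action 0: stay
    [[0.0, 1.0], [1.0, 0.0]],   # action 1: switch
]
R = [[0.0, 0.0], [1.0, 0.0]]
V, policy = value_iteration(P, R)
print(V, policy)
```

In this example the values converge to roughly 9 for state 0 and 10 for state 1, and the greedy policy is to switch out of state 0 and stay in state 1.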

Source: towardsdatascience.com

The three British Gas payment schemes are (1) cheque/cash payment, (2) credit card debit, and (3) bank account direct debit.

Source: study.com

Markov decision process, part 1. In a typical reinforcement learning (RL) problem, there is a learner and decision maker called the agent, and the surroundings with which it interacts are called the environment.
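The agent and environment interaction described above can be sketched as a simple loop. Everything in this example is hypothetical: the `CorridorEnv` class, its `reset`/`step` interface, and the fixed `always_right` policy are illustrative choices, not taken from the source or from any particular library.

```python
class CorridorEnv:
    """Toy environment: a corridor of cells 0..length-1 with a goal at the end."""

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        """Apply the agent's action and return (next_state, reward, done)."""
        move = 1 if action == "right" else -1
        self.state = min(max(self.state + move, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 1.0 if done else 0.0
        return self.state, reward, done


def always_right(state):
    """A fixed policy: the agent's decision depends only on the observed state."""
    return "right"


def run_episode(env, policy, max_steps=100):
    """Let the agent act until the goal is reached or max_steps is hit."""
    state = env.reset()
    total_reward, steps = 0.0, 0
    for _ in range(max_steps):
        action = policy(state)                   # agent chooses an action
        state, reward, done = env.step(action)   # environment responds
        total_reward += reward
        steps += 1
        if done:
            break
    return total_reward, steps


print(run_episode(CorridorEnv(), always_right))
```

The loop makes the division of roles explicit: the policy only sees the state, while the environment alone decides the reward and the next state.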

Source: stats.stackexchange.com

Markov decision processes: value iteration (Pieter Abbeel, UC Berkeley EECS). The current state completely characterises the process. Almost all RL problems can be formalised as MDPs.

Source: slideplayer.com

Markov decision processes and exact solution methods. An MDP model includes a real-valued reward function R(s, a).

Source: bosem.in

We'll start by laying out the basic framework, then look at Markov ... A Markov decision process (MDP) model contains the states, actions, reward function, and transition description listed earlier.

Source: maelfabien.github.io

Source: researchgate.net

A policy is the solution of a Markov decision process.
